This is part of the blog series about building a Co-Pilot for DB Ops. The journey starts here.

Thesis:

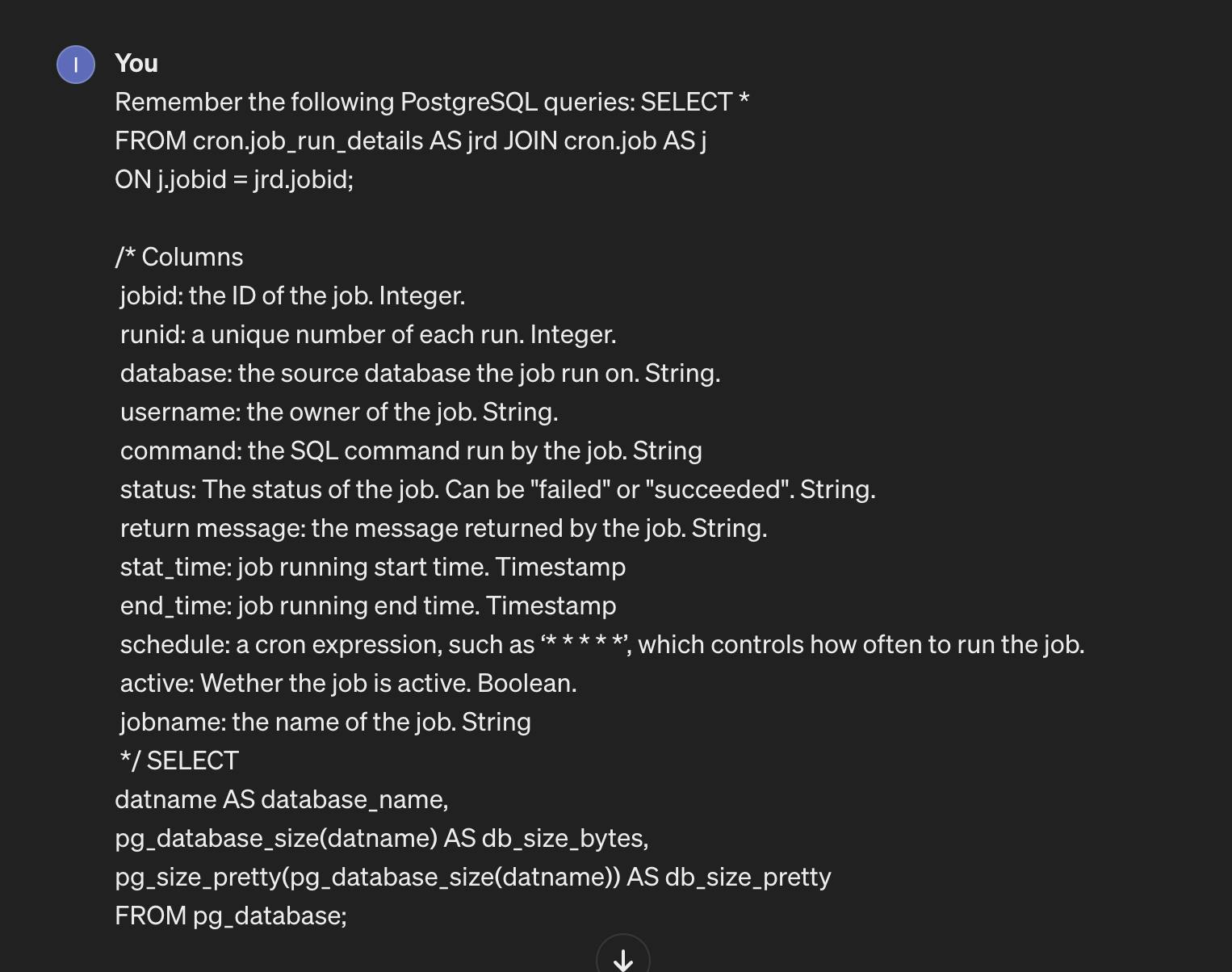

Sending detailed documentation of every column will ensure high quality SQL.

Sending a long context, with detailed information about the schema of the tables and sample queries, will ensure high quality SQL. When using an text2sql tools, on a schema of your organization, which the LLM obviously never saw before, you must provide explanations about every columns or get useless results. So probably we'll have to do the same for the system tables (inforamation_schema, performance scheme...)

More details:

Provide the context in the prompt (Remember the following PostgreSQL queries....)

Ask ChatGPT to write a query

Test it manually.

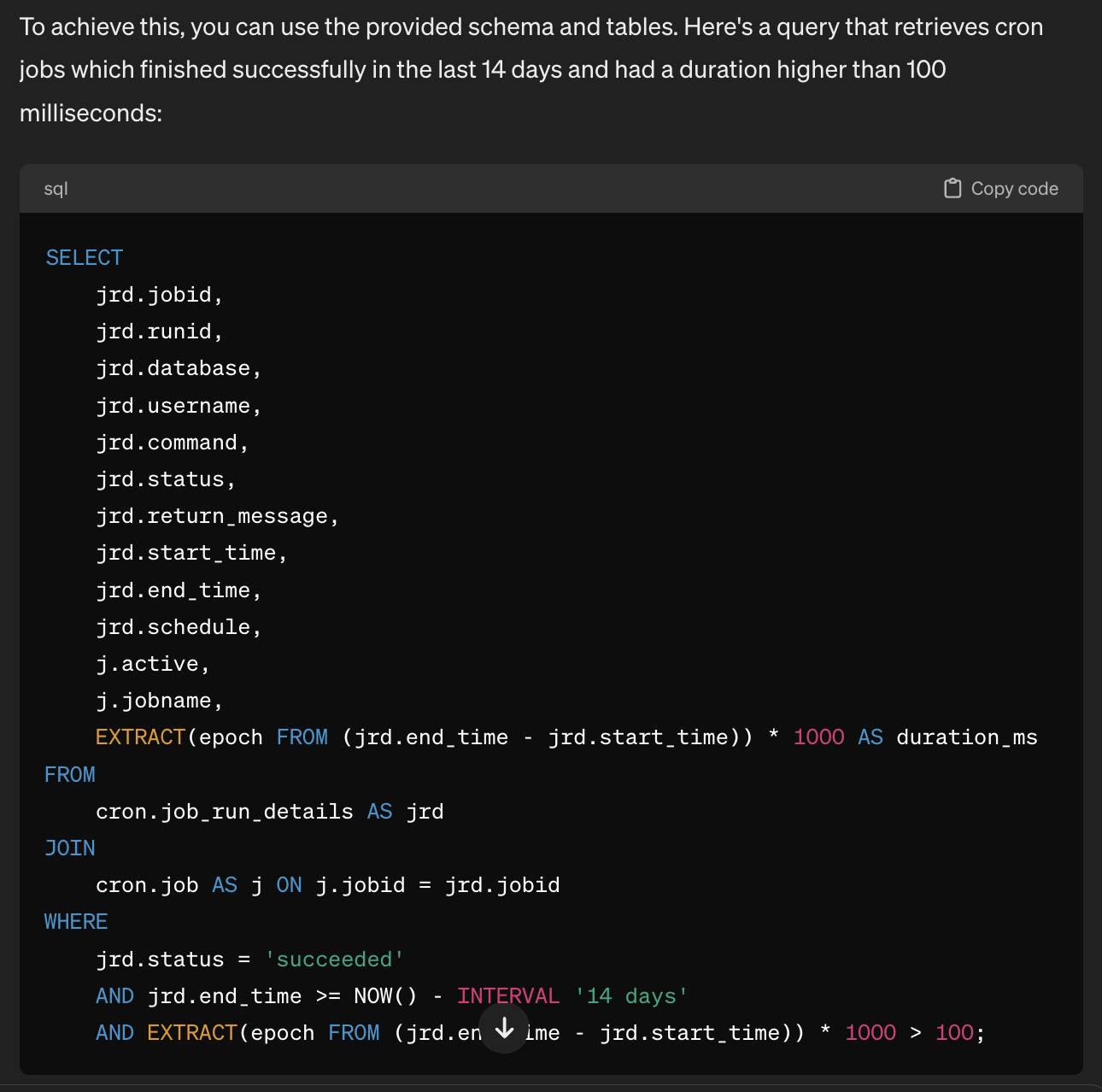

Prompt: Write a query to show the cron jobs which finished successfully in the last 14 days, with a duration higher than 100ms.

Conclusion

The results are much better. I need to investigate in this direction.

The context window can easily reach 50,000 tokens. One small query generated 200 tokens (https://quizgecko.com/tools/token-counter).

Sending a large context window to the API might be expensive. Is there a way to send the context once and use a "memory" of the API? The answer is not "Of course". It is more complicated.

Sending so much text might affect performance => bad UX

Thesis:

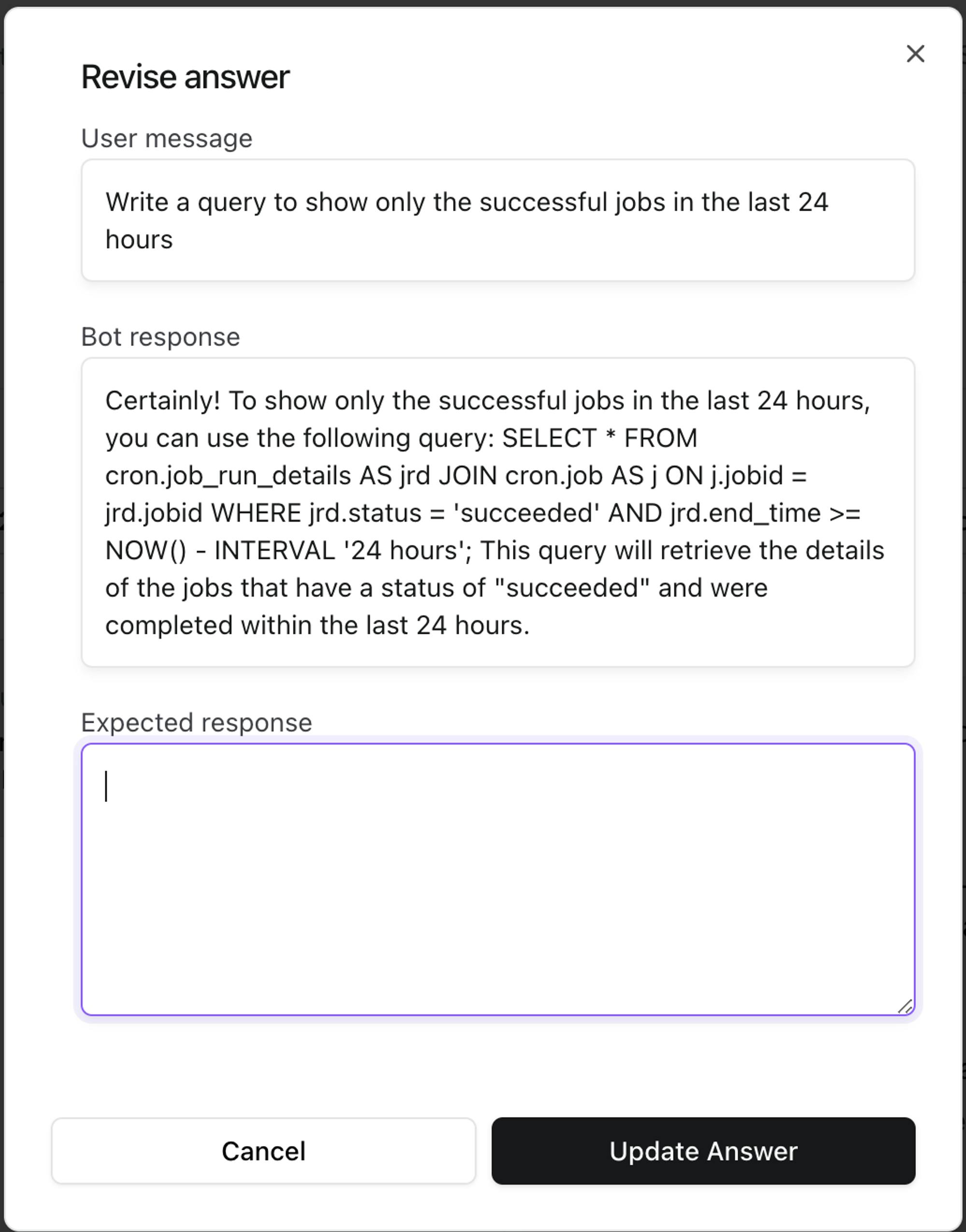

A No-code chatbot should generate high quality SQL

The next step is using a chatbot generator and train it with many detailed SQL commands that I document.

There are dozens of options out there, I decided to try https://www.chatbase.co/.

Chatbase let you train a model, for free, using a page in Notion.so. Which is perfect for this early step of investigation. So I wrote 10 well documented queries, covering different aspects of DB Ops and created a chatbot in a few clicks.

If the test goes well, the next step will be start using an API. The API will return only the generated SQL for the Co-Pilot to run and show the results.

Conclusion

I need to Improve the IP - write a long list of well documented examples of how to retrieve the data.

Use an API that can "learn" (RAG) from the examples I'll provide. An LLM dedicated for SQL queries should give us good results, fast, in reasonable price.

Spoiler alert: not that easy...