This is part of the blog series about building a Co-Pilot for DB Ops. The journey starts here.

Testing GPT-3.5 as a Copilot: Navigating Uncharted Territory

In software testing, we usually have clear plans for testing regular programs. But trying out new things like Language Models (LMs), such as GPT-3.5 as a copilot, brings different challenges and chances to learn. Since building a Co-Pilot is unlike anything else that I did, I plan to suggest a thesis (simple test) with a clear goal and then write the conclusion.

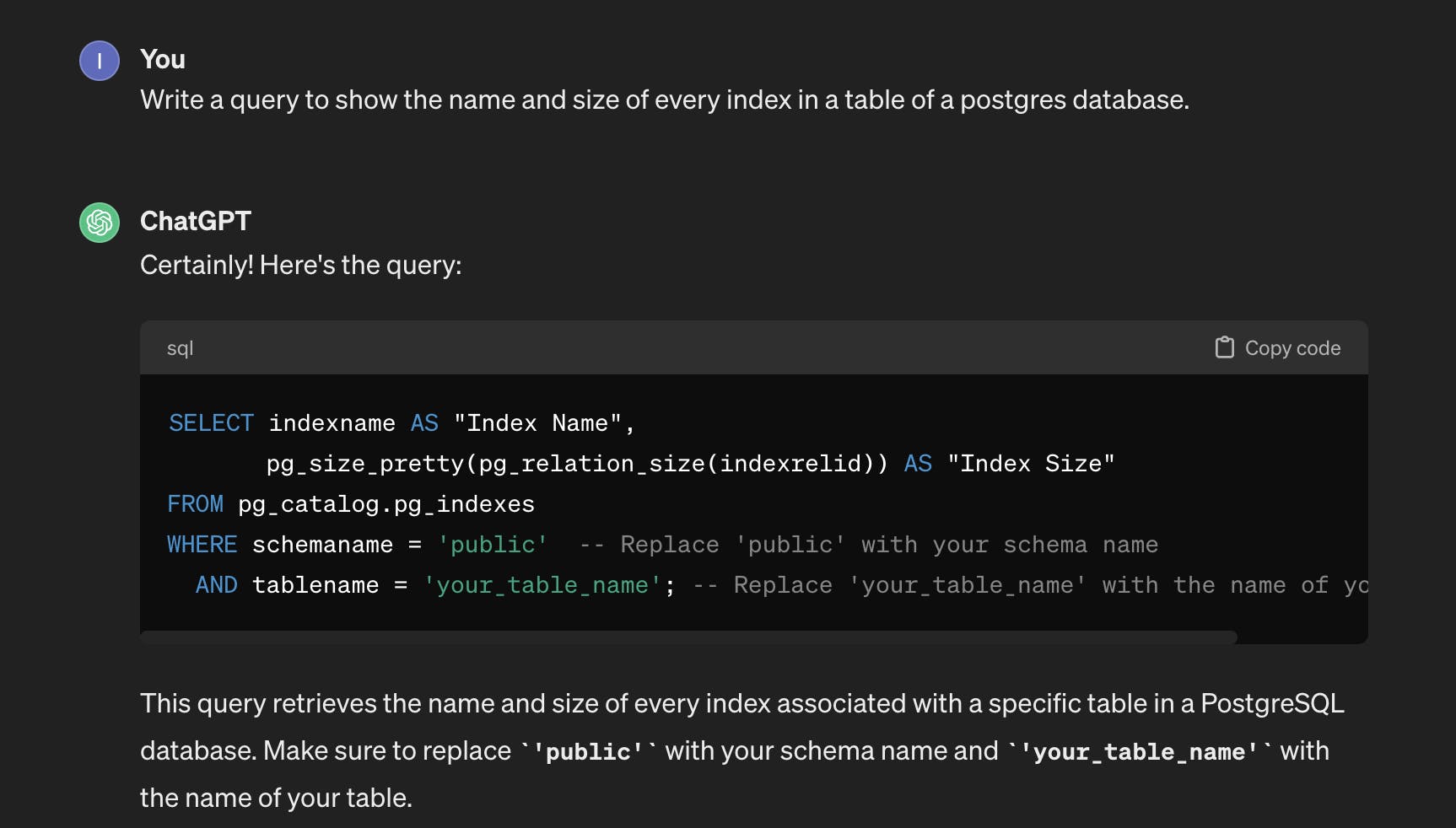

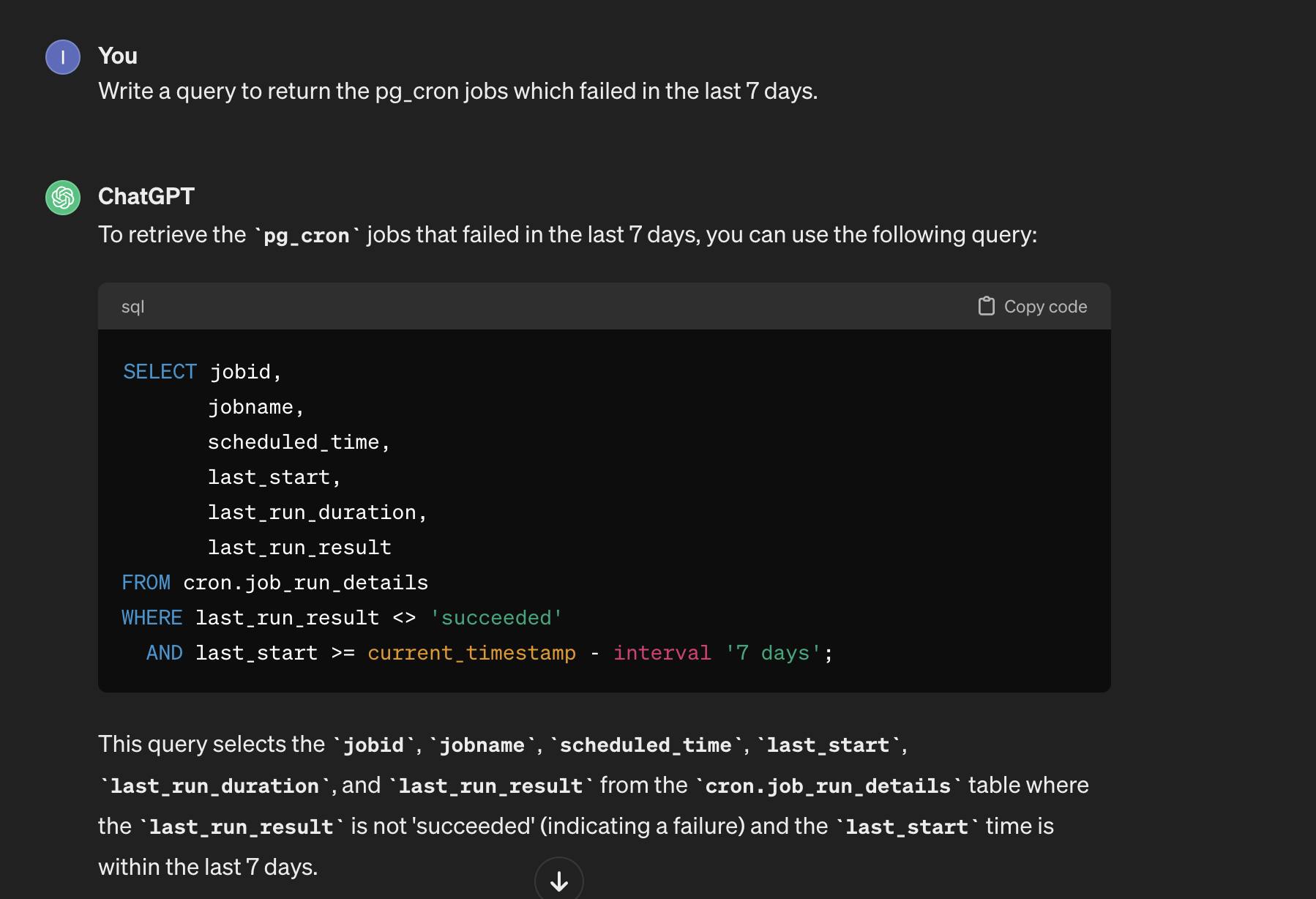

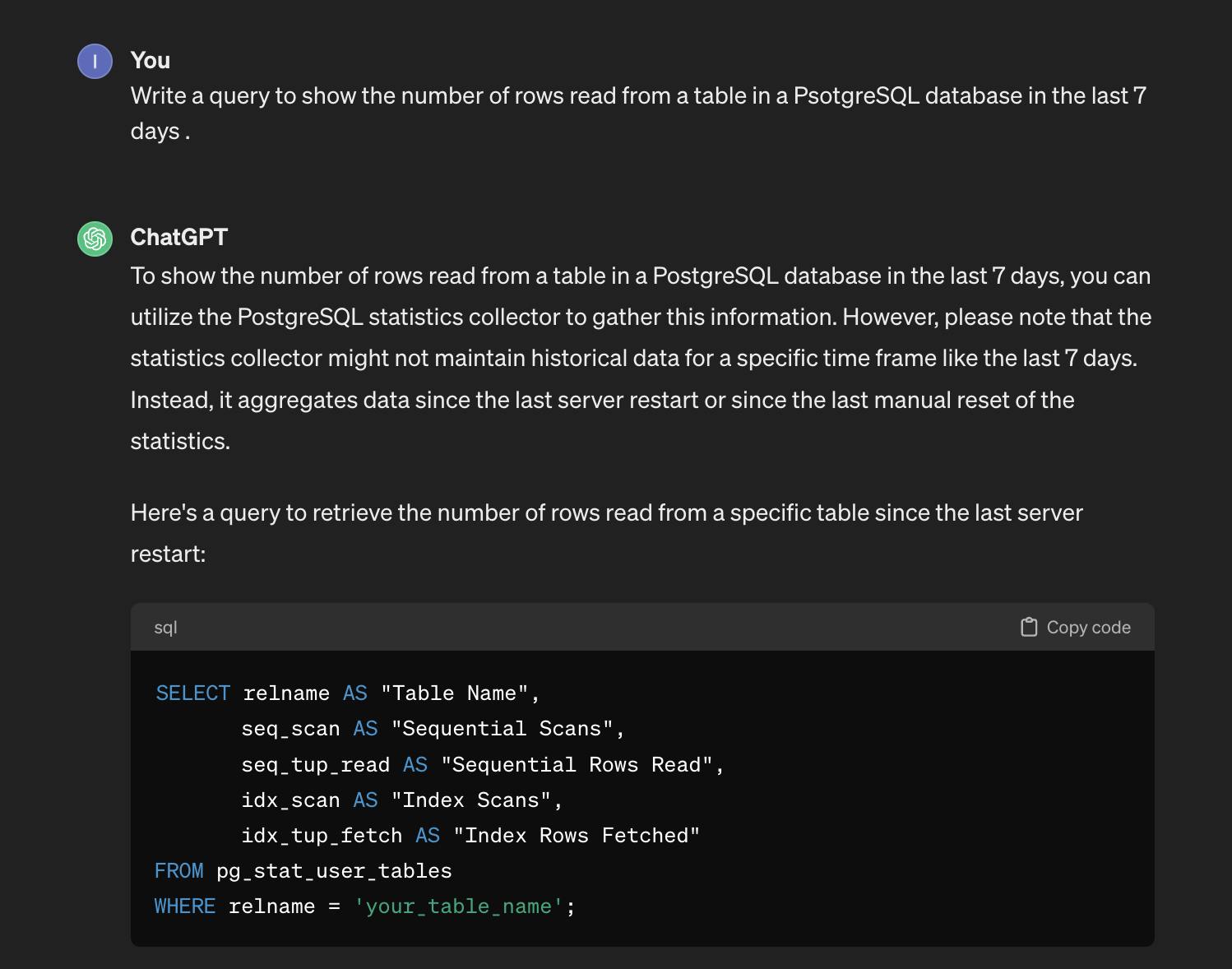

The plan is to assess ChatGPT 3.5's proficiency in generating SQL queries from natural language instructions. If ChatGPT performs satisfactorily, we'll proceed to test the API. The approach involves employing "zero shot" methodology: simply pose the question (prompt) and manually paste it into an SQL IDE (e.g., DBeaver, compatible with both PG and MySQL) to validate that the SQL query functions correctly and yields the desired data.

Thesis - Zero Shot

During its training, the LLM learned the structure of system tables in Postgres and MySQL, enabling it to generate effective SQL queries.

Conclusion

ChatGPT and the OpenAI API do NOT generate good SQL queries; they often hallucinate nonexistent tables and columns. Additionally, they lack knowledge of valid values within system tables.

Similar tests conducted on Google Gemini (Bard) and Claude yield similar outcomes. While it's not a complete failure, it falls short of being useful.

Example 1: the query fails for using non existing column

Example 2: non existing columns

Example 3: table size . This works! It managed to understand how to convert to MB.

Example 4: Ask for an information the DB can't provide. It works. The LLM explained that it can't provide the information. But it did return a query. Probably a better prompt is needed.

Example 5: I inquired about the CPU usage. Although the query executes, it yields completely inaccurate data, which is unacceptable.

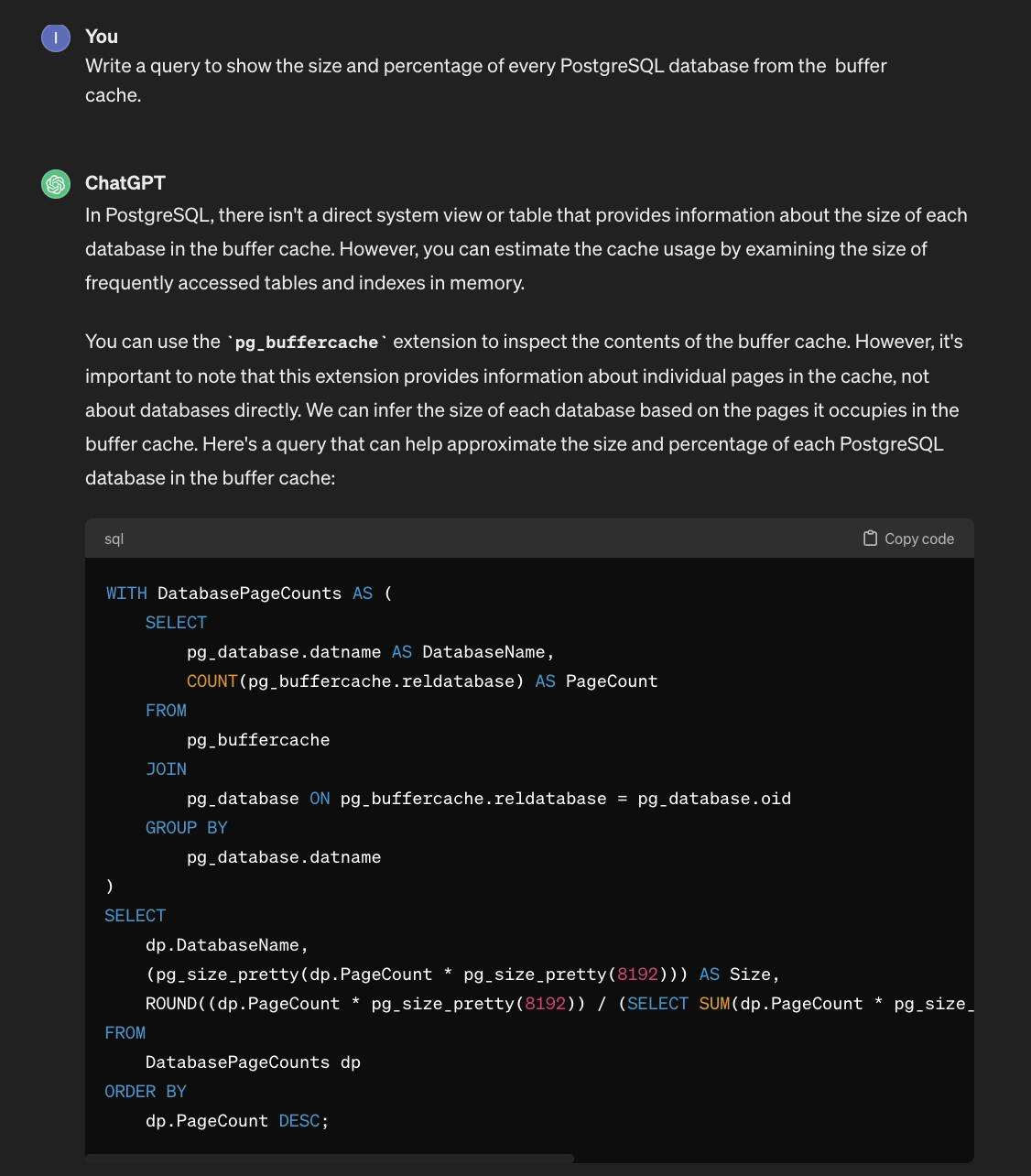

Example 6: Size of buffercache, grouped by database. The query won't run.

I can go on with more 100s of examples for hours.

Thesis - Let the Co-Pilot fix the errors

Let the LLM fix the problems. Here I do everything manually, but I can easily write code that:

Run the request of the user

Extract the SQL from the result

If the execution of the SQL returns an error send it to the LLM and try again.

After a small number of failures (say 3-4) stop trying, to avoid an infinite loop

Rerunning the last example. Just pasting the error I got.

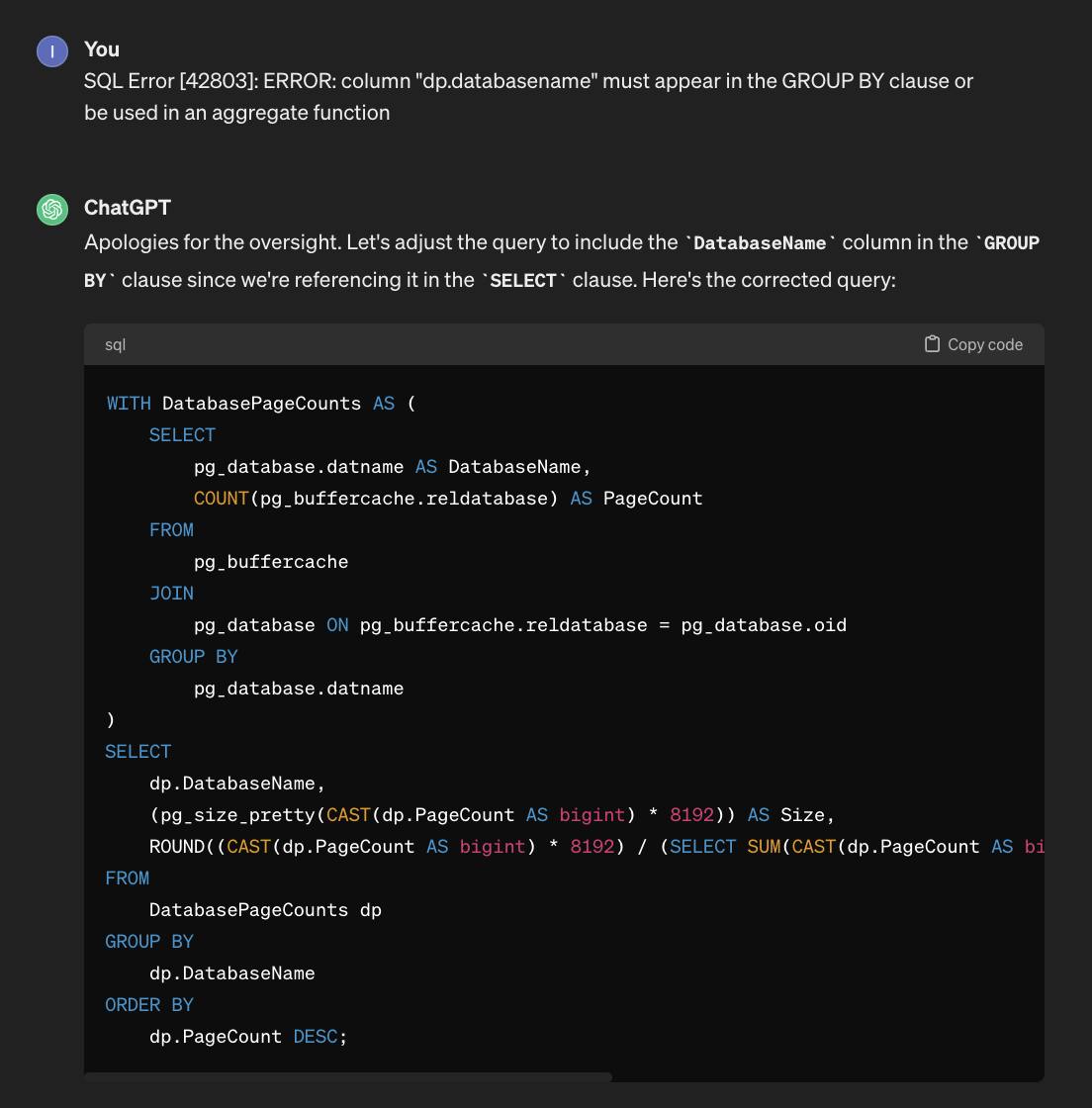

Error. Trying again

Error: SQL Error [42803]: ERROR: column "dp.pagecount" must appear in the GROUP BY clause or be used in an aggregate function.

I gave up.

Conclusion

Let's try another approach: A large context with detailed explanations what each query does, and what each columns contains.