Using Chat GPT to improve your Postgres (and MySQL) skills

A few examples how Chat GPT can help with DB-Related Tasks

By Itay Braun, CTO, Metis

ChatGPT is a prototype dialogue-based AI chatbot capable of understanding natural human language and generating impressively detailed human-like written text. In this post we'll see how it can help with DB-related tasks.

Why Should I care?

No one should remember by heart how to use each of the SQL commands. As a busy developer, you use the not-so-great Postgres official documentation and StackOverflow. However, ChatGPT can provide information and guidance on how to perform various database tasks, such as creating tables, inserting data, and querying data. Additionally, it can help by explaining database concepts and terminology, which can be useful for those who are new to working with databases so that they can perform their tasks more effectively.

Can you give me 3 real-world examples?

Show me how to write a query to calculate the size of a table and the usage of its indexes

Show me, with examples, how to write a function that calculates the diff between the sales of a any date compared to the previous day

Show python code how to use the AWS SDK to retrieve the CPU of an RDS in the last 3 days

Starting with some basic example of Prompts

A commend in the terminology of ChatGPT called prompt. It can be very details and contain multiple sentences.

I won't publish here all the screenshots, you should try it yourself. The app is free (for now).

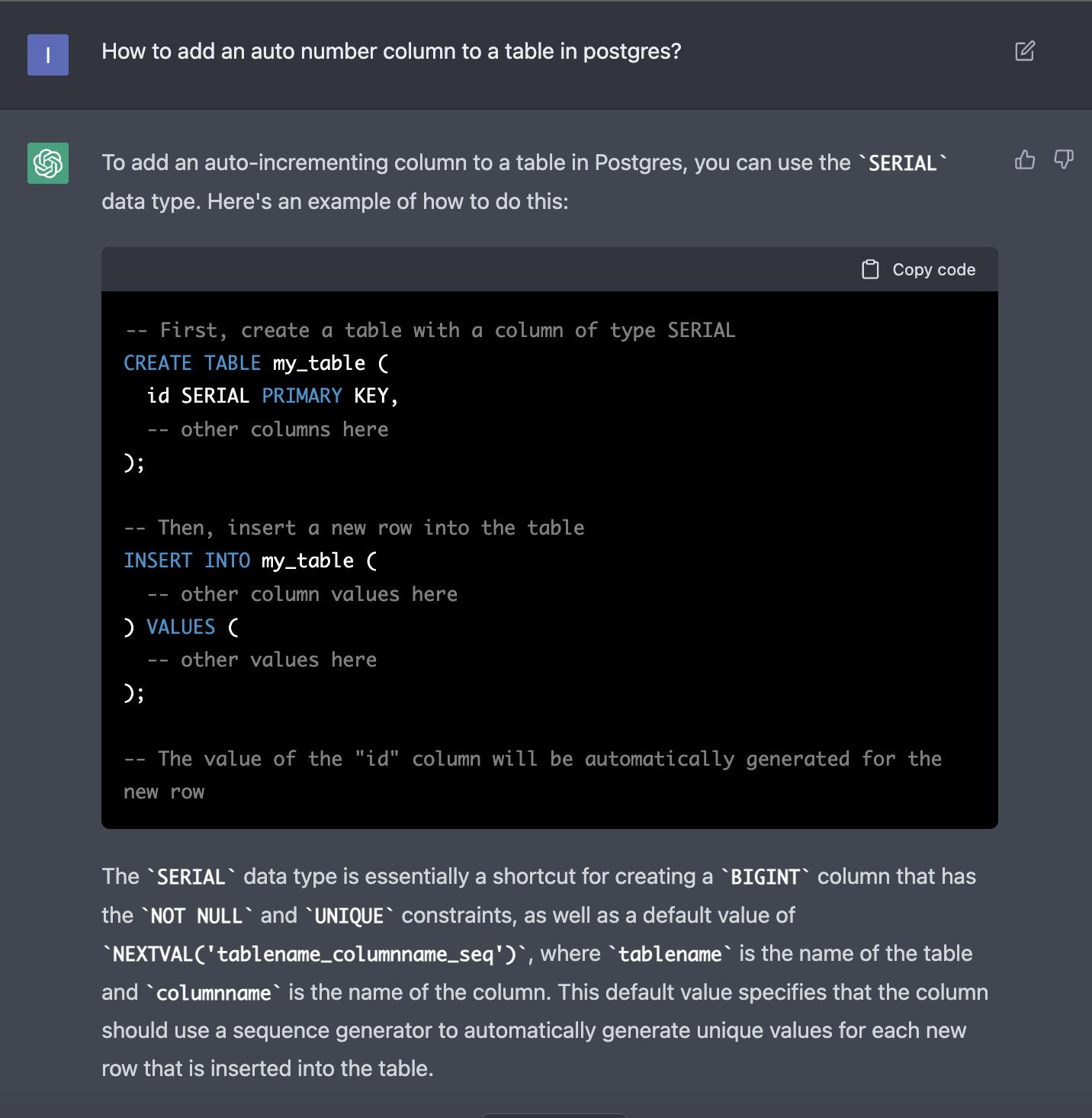

Let's start with a simple question: How to add an auto number column to a table in postgres?

As you can see, ChatGPT provides a clear and detailed answer:

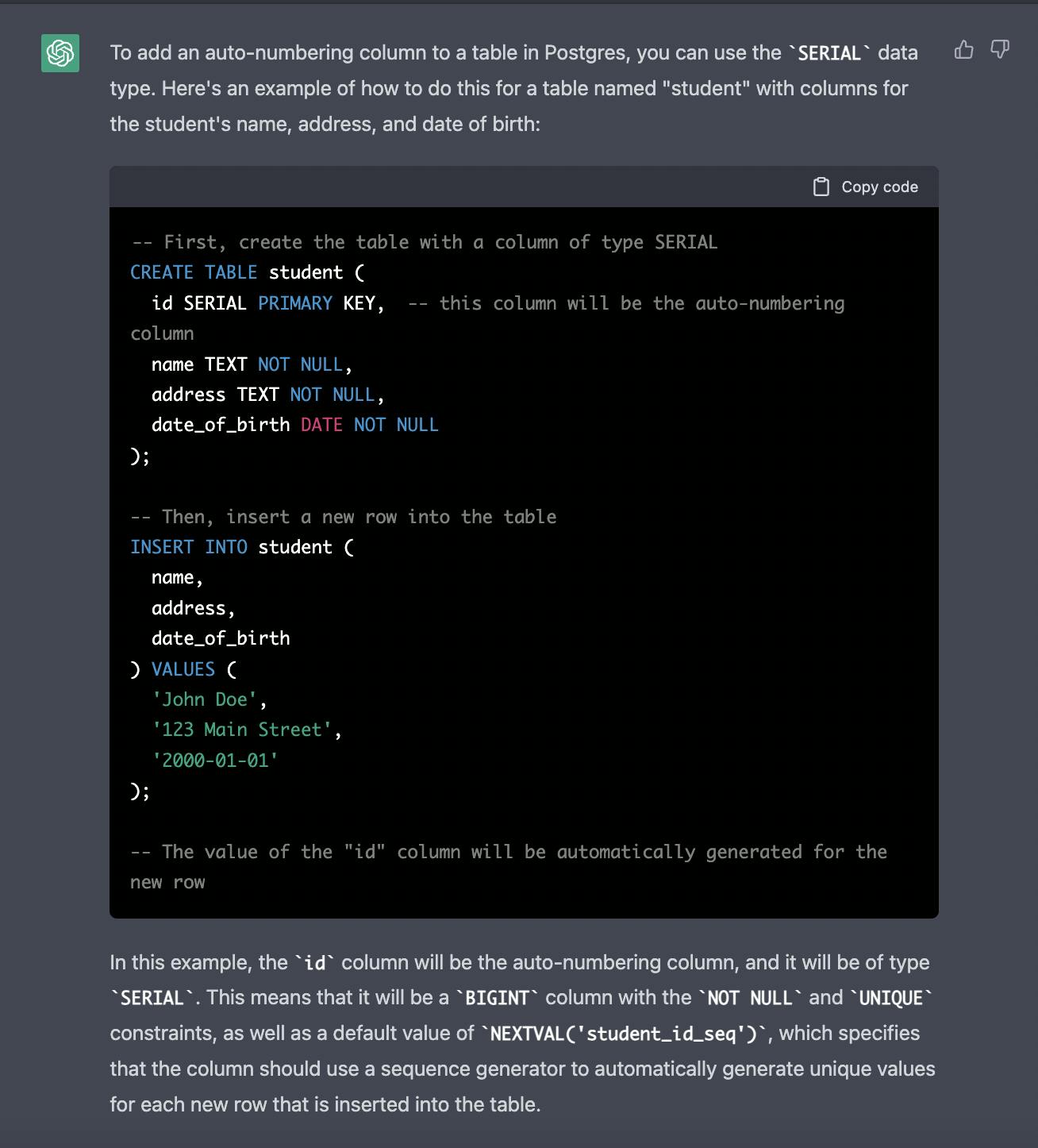

Now, let's ask the question again, but also ask to show us an example.How to add an auto number column to a table in postgres? Show me an example using the table "student" which has the columns: student name, address, date_of_birth

The results:

You can try a few more prompts. As you can see, English doesn't have to be grammatically correct. It has a pretty good understanding of

A query to show the date of tomorrow in PostgresShow only the date, without '00:00:00' in PostgresExample of psql with a multi line SQL command

At some point, ChatGPT gets the context and understands that we're talking about Postgres and no need to mention that again

Show me the size of the tablesShow the usage of indexesDefine a parameter in postgres called "extension_exists". The save to it whether the pg_cron extension exists

Advanced Prompts

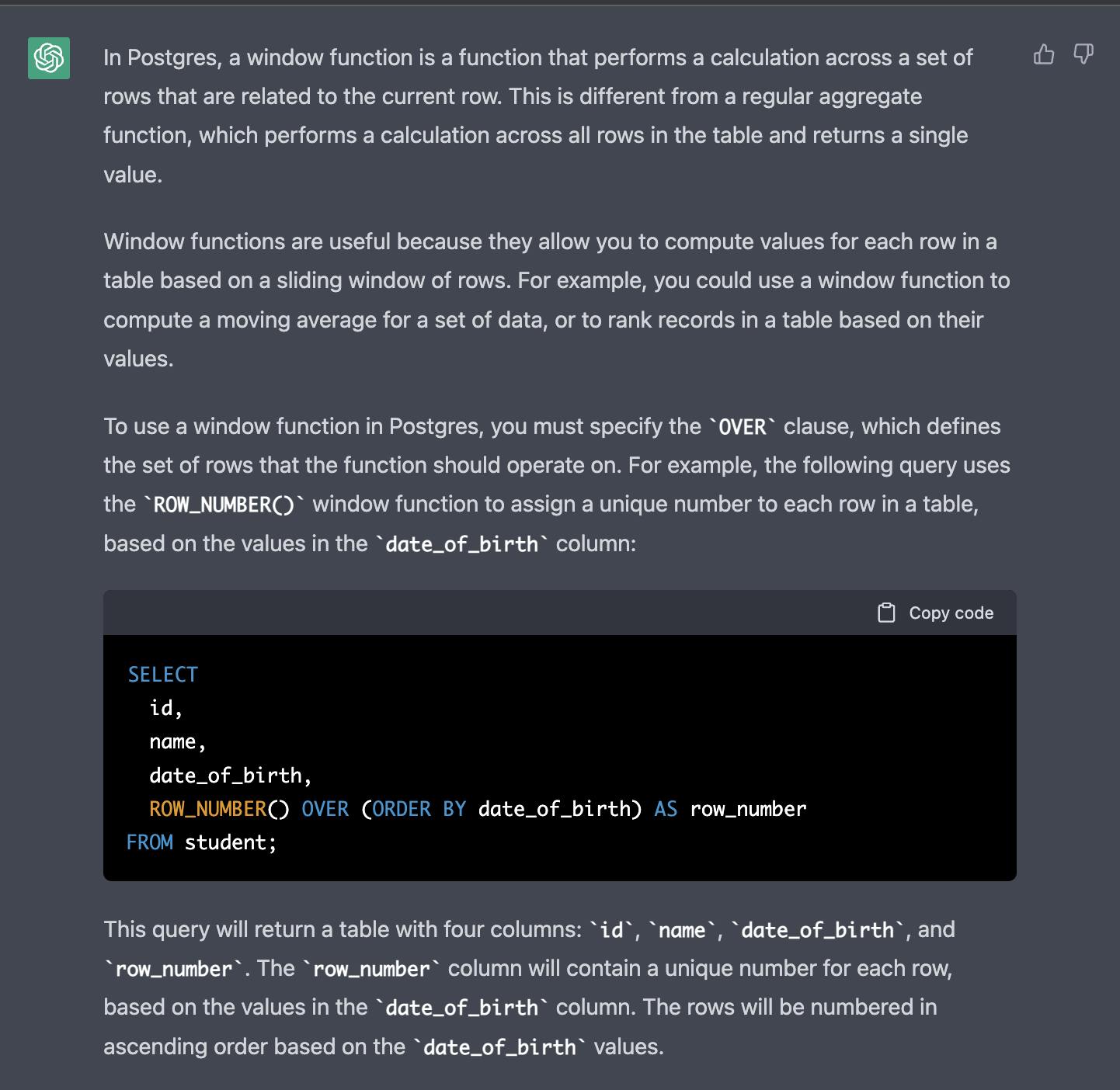

ChatGPT is really useful to learn about various DB-related subjects. For example, Window Functions. First, ask What is a window function in postgres?

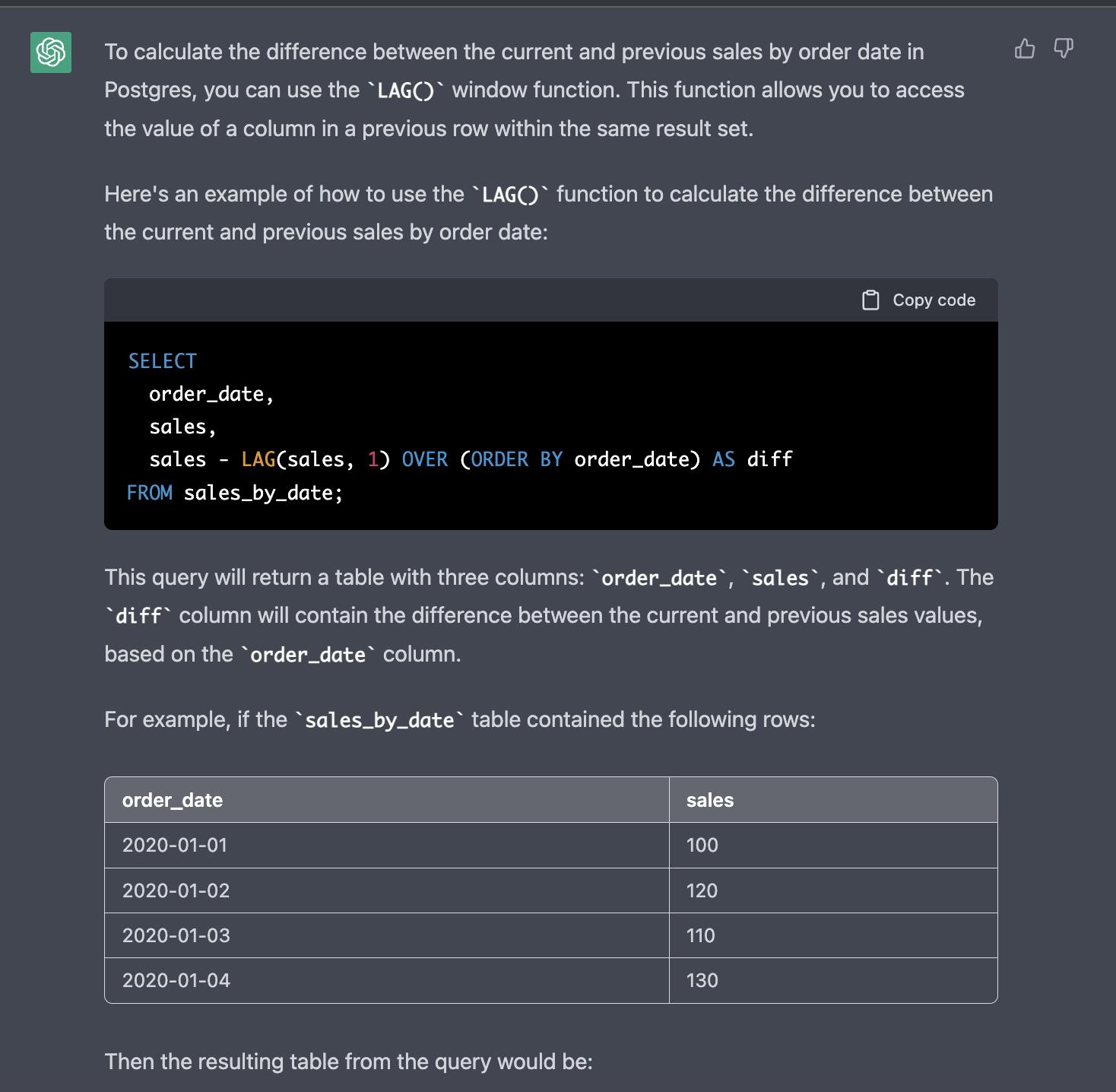

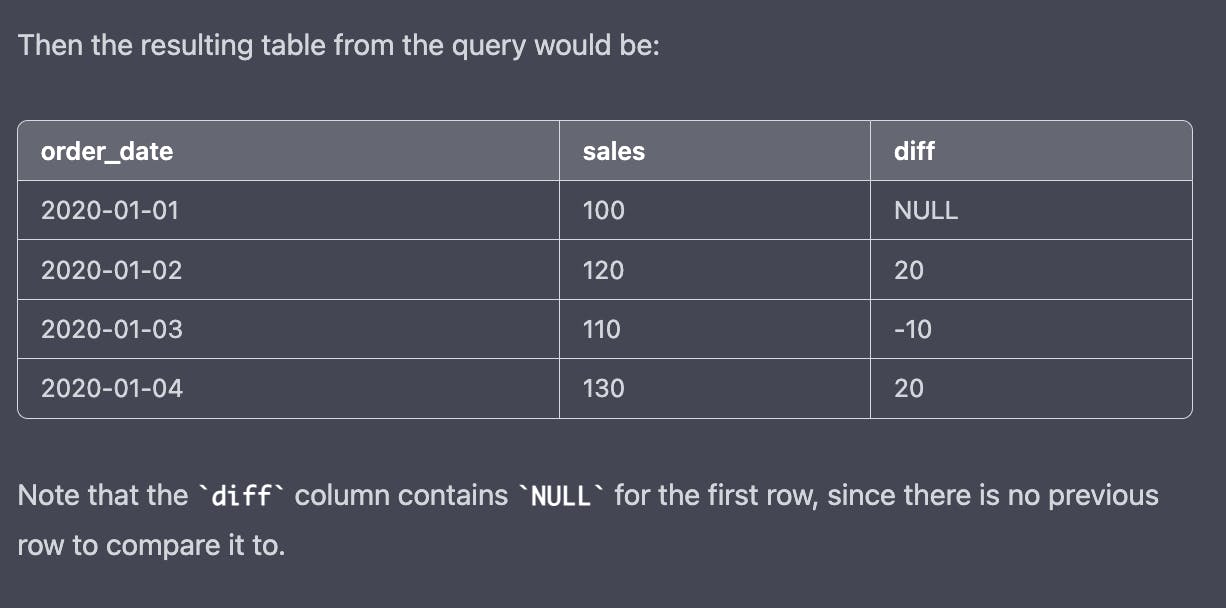

Then we can ask: Show an example of a window function in Postgres to calculate the diff between the current and previous sales by order date

The answer is long and detailed:

It can also show code examples of how to use the AWS SDK or GreatExpectations Data Quality library. Remember the model was trained about a year ago so it won't contain the latest updates.

I wrote: Show me an example of greatexpectations.io data quality tests for a python data set with a query to postgres

ChatGPT answered:

GreatExpectations is a tool for defining, documenting, and testing the quality of your data. It is written in Python and provides a framework for creating and running data quality tests on your data sets.

Here is an example of how you might use Great Expectations to define and run some data quality tests on a data set that you are querying from a Postgres database:

# First, import the necessary modules and classes from Great Expectations

from great_expectations import DataContext, expect_column_values_to_be_in_set

# Next, create a DataContext object to manage your data and tests

context = DataContext()

# Now, define your Postgres query and load the results into the DataContext

my_query = "SELECT * FROM my_table"

my_data = context.get_batch(

batch_kwargs={"query": my_query},

dataset=context.get_dataset(

"my_dataset",

batch_kwargs_generators=[

{

"my_table": {

"class_name": "QueryBatchKwargsGenerator",

"query": my_query,

}

}

],

),

)

# Define your data quality tests using the expect_* methods provided by Great Expectations

expectation_suite = context.create_expectation_suite("my_expectations")

# For example, you might expect the "status" column to have only certain values

expectation_suite.expect_column_values_to_be_in_set(

column="status",

value_set=["active", "inactive", "pending"],

result_format={

"result_format": "BASIC",

"summary_result_format": "BASIC",

},

)

# You can also expect the values in other columns to meet certain conditions

expectation_suite.expect_column_values_to_be_of_type(

column="amount",

type_="numeric",

result_format={

"result_format": "BASIC",

"summary_result_format": "BASIC",

},

)

# Once you have defined your expectations, you can run the tests on your data

results = context.run_validation_operator(

"action_list_operator",

assets_to_validate=[my_data],

expectation_suite=expectation_suite,

)

# The results of the tests will be returned as a dictionary

# You can then use the results to check whether your data meets your expectations

print(results)

In this example, we define our Postgres query and load the results into the DataContext using the get_batch and get_dataset methods. We then define two data quality tests using the expect_column_values_to_be_in_set and expect_column_values_to_be_of_type methods provided by Great Expectations. These methods allow us to specify the conditions that we expect the values in our data set to meet.

Once we have defined our expectations, we can run the tests on our data set by calling the run_validation_operator method and passing in our data and the expectation suite

Conclusion

ChatGPT is a great tool for developers, it provides very useful answers to common problems. I found it 10X valuable than StackOverflow. It can't connect to AWS and run tasks but can be very useful in quickly building advanced pipelines.

And until it is able to analyze the performance of a query (I tried, but it did a bad job), we have Metis to help.